Artificial intelligence has become an integral part of banking development. The use of AI in banks allows for the automation of processes, fraud detection and the customisation of services. Technologies such as machine learning and data analytics not only speed up operations, but can also contribute to increased security.

According to the McKinsey Global Survey report ‘The State of AI in 2023’, up to 75 per cent of companies in the financial sector plan to increase their investment in AI over the next few years. Banks clearly recognise the huge potential of AI, but they also face a challenge: how can they manage this technology effectively while staying compliant with upcoming regulations?

AI Act – challenges for the financial sector

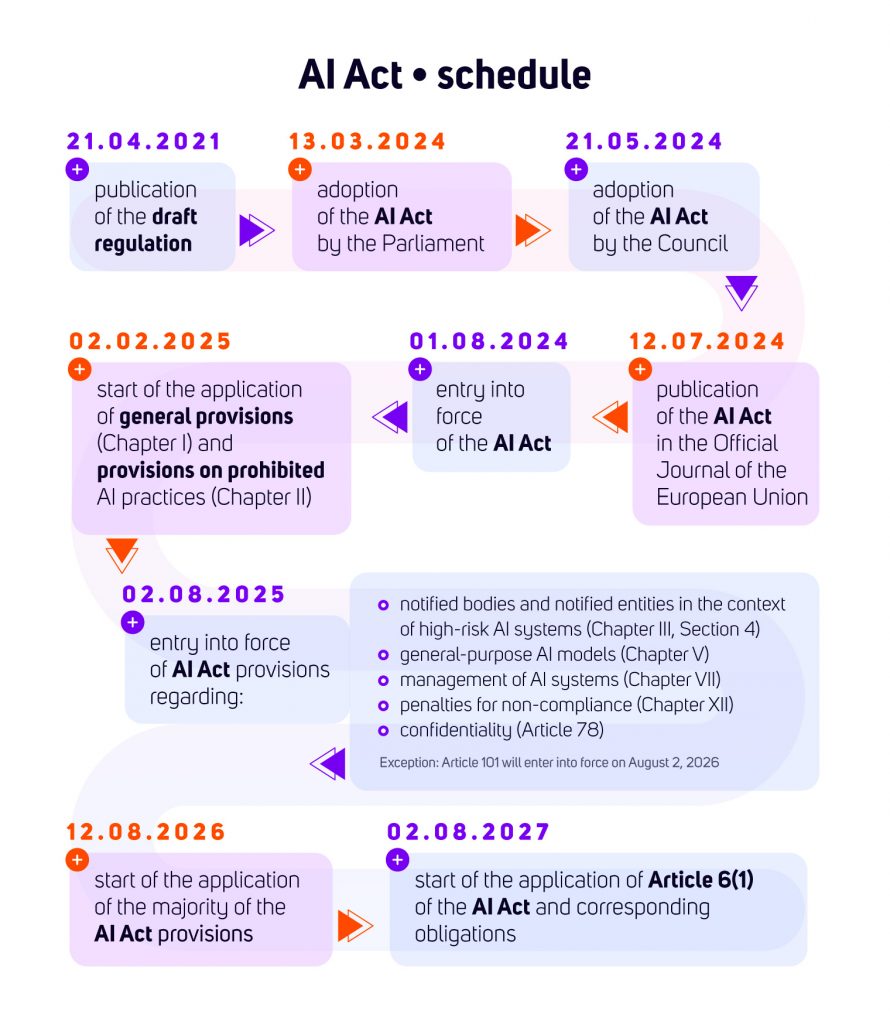

The AI Act is an EU regulation that introduces uniform rules for the implementation and monitoring of AI systems. In March 2024, the European Parliament adopted its final version. The regulation aims to establish clear rules and standards for the use of AI in Europe.

The time to prepare for the AI Act is extremely short. The first provisions, including the ban on prohibited practices, apply from February 2025, and most of the remaining obligations apply from August 2026. This means that banks have less than two years to prepare for the new regulations, which will have a direct impact on all their processes using AI. For large organisations, which need to map all their AI models, assess their risks, improve documentation and conduct appropriate audits, this is very little time.

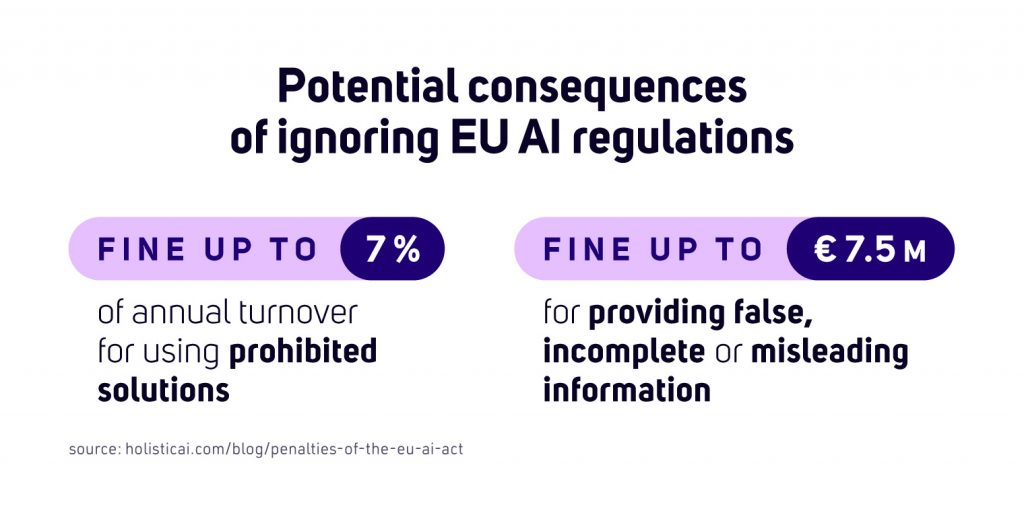

According to the EY Global Survey report, as many as 60% of financial institutions admit that they are not fully prepared for the upcoming AI Act requirements. This is a serious problem, as inadequate preparation can have severe consequences. Operational chaos is one of the main risks – banks may find it difficult to implement AI projects and, in the worst case, may be forced to suspend them in order not to violate the law.

The risk of penalties is another important aspect – if financial institutions fail to audit their AI systems, they could face fines or other financial sanctions. Equally important is the reputational risk. Regulators may publicly point out violations, which could seriously damage the image of banks.

A proactive approach to AI Governance

A proactive approach to AI Act implementation is the key to success. It is better to start developing a compliance plan now than to wait until the last minute. The situation is similar to the implementation of the GDPR, where companies that addressed their privacy policies early went through the process smoothly. The latecomers, on the other hand, faced hectic preparations just before the deadline.

The new regulations impose a number of obligations, such as:

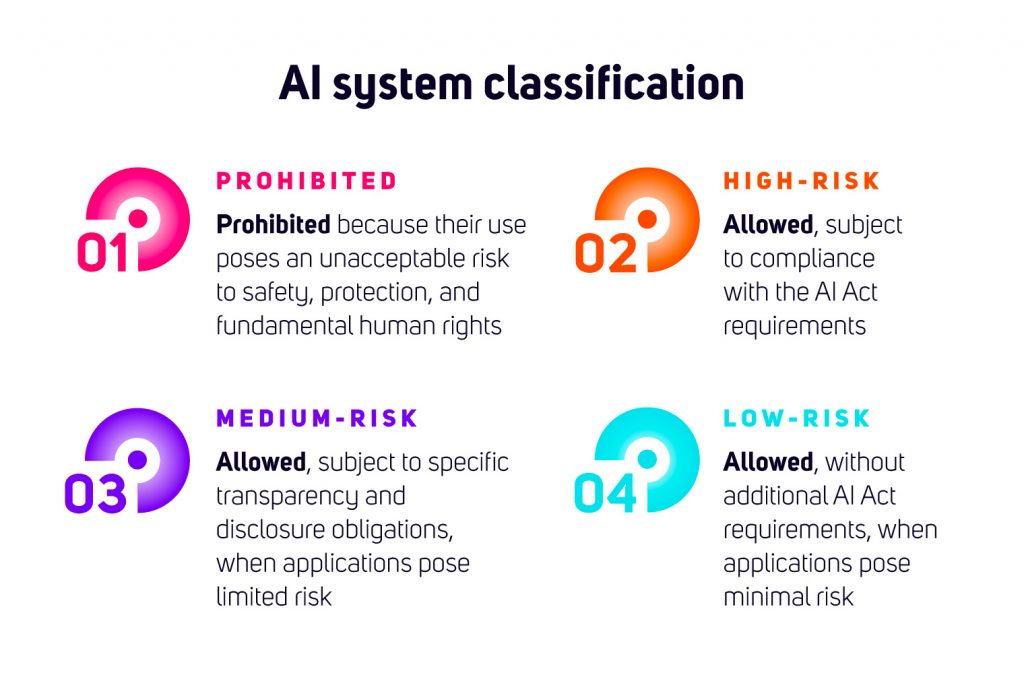

- Categorisation of AI systems – the need to assess whether a system is classified as ‘high risk’, requiring additional transparency and compliance.

- New security standards – AI in banking must operate in accordance with ethical principles, minimise the risk of algorithmic bias and ensure that decisions made by algorithms are fully auditable.

- Technical documentation and reporting – financial institutions implementing AI will be required to keep detailed technical documentation and report to regulators.

- Transparency of algorithms – decisions made by AI must be understandable and verifiable, to counter so-called ‘black-box’ AI.

- Risk monitoring – an obligation to conduct risk analyses of deployed AI systems and their potential impact on users.
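As a rough illustration of how the categorisation and documentation obligations above might be tracked in practice, a bank could start with a simple inventory of its AI systems. The sketch below is a minimal Python example. The `AISystemRecord` fields, the `RiskTier` labels and the use-case-to-tier mapping are all assumptions made for illustration; they are not a classification taken from the AI Act, and a real assessment belongs with legal and compliance teams.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical mapping, used only to make the sketch runnable.
# It is NOT a legal classification of these use cases.
ASSUMED_TIER_BY_USE_CASE = {
    "credit_scoring": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "fraud_detection": RiskTier.MINIMAL,
}


@dataclass
class AISystemRecord:
    name: str
    use_case: str
    owner: str
    has_technical_docs: bool = False
    last_risk_review: Optional[str] = None  # ISO date, None if never reviewed

    @property
    def tier(self) -> Optional[RiskTier]:
        # None means the use case has not been assessed yet.
        return ASSUMED_TIER_BY_USE_CASE.get(self.use_case)

    def open_gaps(self) -> List[str]:
        """List the compliance gaps this sketch knows how to detect."""
        gaps = []
        if self.tier is None:
            gaps.append("risk tier not assessed")
        if self.tier is RiskTier.HIGH and not self.has_technical_docs:
            gaps.append("technical documentation missing")
        if self.last_risk_review is None:
            gaps.append("no risk review on record")
        return gaps


inventory = [
    AISystemRecord("scorecard-v2", "credit_scoring", owner="credit-risk"),
    AISystemRecord("helpdesk-bot", "customer_chatbot", owner="digital",
                   last_risk_review="2024-10-01"),
    AISystemRecord("churn-model", "churn_prediction", owner="marketing"),
]

for record in inventory:
    print(record.name, record.open_gaps())
```

Even a list this simple makes it visible which systems still lack a risk assessment, documentation or a review, which is the starting point for any audit.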

Prohibited AI systems

Under the AI Act, the European Union bans certain AI practices that pose too great a risk to society and individuals, prohibiting their placement on the market and their use.

Prohibited AI systems include those that use manipulative techniques or assess people in a discriminatory manner. An example of this is behavioural manipulation, where AI subliminally influences a person’s decisions, e.g. prompting them to purchase a product they were previously unconvinced about.

Another prohibited case is the use of people’s sensitive characteristics, such as age, disability, ethnicity or sexual orientation, in a way that leads to manipulation or discrimination. In the context of banks, this means that financial institutions cannot use AI to assess their customers on the basis of data unrelated to their financial situation, such as denying credit to people with certain social habits.

Although banks can use AI to personalise services, e.g. for product recommendations, they must avoid any actions that could be perceived as manipulation.

An example of crossing the line is AI that deliberately triggers a certain emotion in the customer to get them to buy. It is important to follow ethical rules when using AI, treating the customer with full respect and transparency.

The ethical dimension of these rules is crucial. Even if banks do not set out to use prohibited systems, they may find that their marketing or scoring solutions breach ethical boundaries. AI Governance must therefore take special care to ensure that any tools used do not affect customers in a way that violates their rights.

What does it take to trust a decision made by AI?

Trusting a decision made by AI depends on several key aspects, which both ensure compliance with legal requirements and build trust with users.

- The first element is explainability – AI cannot be a black box. We need to understand why the model has made a certain decision. In a bank, this means that a customer or employee must be able to get an explanation, for example about a credit decision.

- Another pillar is fairness – i.e. avoiding bias and discrimination. Models must treat different groups fairly and any differences must be substantively justified.

- Robustness is also an important aspect – AI should be stable, even when it encounters new, unexpected situations such as a change in the data or attempts to manipulate the input data.

- To ensure trustworthiness, there also needs to be transparency – full disclosure of the process of creating and implementing AI models. Users must be aware of how their data is used and on what basis decisions are made.

- Finally, privacy, i.e. the protection of user data, plays a key role. Models must comply with data protection regulations, e.g. the GDPR, and ensure that customer data is not at risk of leakage or misuse.
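The fairness pillar above can be made measurable. One simple and widely used check compares approval rates between groups: the ratio of the lowest rate to the highest. The sketch below is a minimal example in plain Python. The function names, the group labels and the sample data are hypothetical, and any threshold a bank applies to the result is its own policy choice rather than something defined in this article.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs,
    where `approved` is True or False.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group approval rate to the highest (1.0 = parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so the rates do not differ
    return min(rates.values()) / highest


# Hypothetical credit decisions: group A approved 8 of 10, group B 6 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 6 + [("B", False)] * 4
)

print(approval_rates(sample))
print(round(disparate_impact_ratio(sample), 2))  # about 0.75
```

A low ratio does not prove discrimination on its own, but it flags a difference that, in the words of the list above, must be substantively justified.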

Furthermore, continuous improvement of models is extremely important – working with AI is an ongoing process that requires constant monitoring and adaptation to changing market conditions.
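Constant monitoring usually includes checking whether the data a model sees in production still resembles the data it was built on. One measure commonly used for this in credit risk is the Population Stability Index (PSI). The sketch below is a minimal, dependency-free version. The equal-width binning, the `eps` floor for empty bins and the sample values are assumptions of this example, not a prescribed method.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one feature.

    Bins are equal-width over the baseline range; recent values that fall
    outside that range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm below stays defined.
        return [max(count / len(values), eps) for count in counts]

    expected_shares = shares(expected)
    actual_shares = shares(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_shares, actual_shares)
    )


# Hypothetical feature values: a baseline and a shifted recent sample.
baseline = [i / 100 for i in range(100)]
shifted = [value + 0.3 for value in baseline]

print(population_stability_index(baseline, baseline))  # 0.0, identical samples
print(population_stability_index(baseline, shifted) > 0)  # True, distribution moved
```

A value near zero suggests the distribution is stable, while a growing value is a signal to review or retrain the model.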

AI Governance – how can banks ensure regulatory compliance?

AI in banking carries great potential, but requires responsible management to ensure compliance with new regulations, security and ethics. Preparing for the AI Act is a key step to avoid the risks of non-compliance.

IBM watsonx.governance – secure AI Governance

With regulations on the way, financial institutions need to implement effective AI oversight mechanisms. IBM watsonx.governance is a platform that helps keep AI systems under control and compliant with legal and ethical requirements. It helps to ensure:

- compliance – by combining data with risk controls and collecting model metadata for audits, it supports AI transparency, policy compliance and standards,

- risk management – enables monitoring of performance, data protection and other risks that may affect the financial institution,

- lifecycle management – facilitates the oversight of predictive ML and generative AI models through the full lifecycle, providing workflows and monitoring tasks.

Find out more about the watsonx.governance platform here.

We will soon have another article on our blog detailing the challenges of managing large AI models and their effective control under AI Governance.

Find out more about how to make your organisation compliant with the new AI Act requirements – contact us!