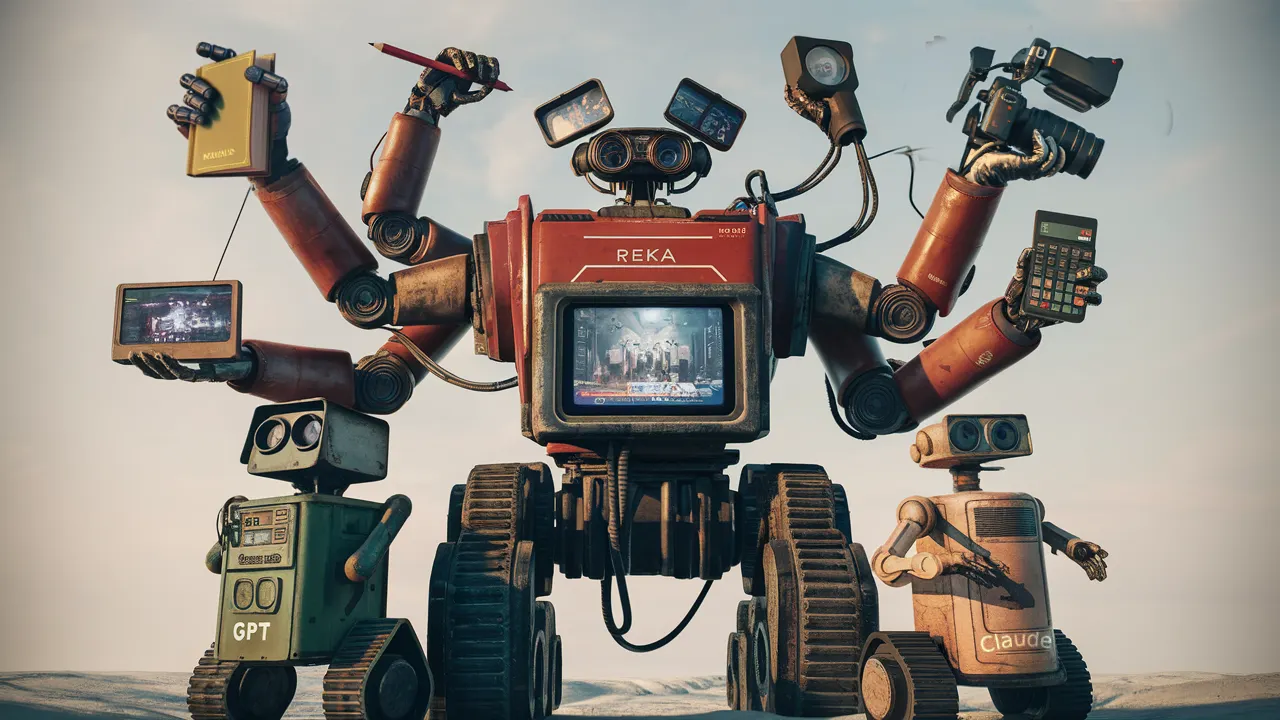

Meet Copilot Workspace, GitHub’s new AI tool, which can “go from idea to code to software in natural language.”

According to the announcement, GitHub hopes to reimagine the entire developer experience: “Copilot Workspace represents a radically new way of building software with natural language, and is expressly designed to deliver — not replace — developer creativity, faster and easier than ever before.” By making software simpler and easier to build, the tool lets professional developers focus on bigger-picture systems instead of being mired in lines of code, GitHub explained. The company also wants Copilot Workspace to help beginner and hobbyist coders.

Radhika Rajkumar

ZDNET

Apple renews talks with OpenAI for iPhone generative AI features, Bloomberg News reports

Apple Inc has renewed discussions with OpenAI about using the startup's generative AI technology. Read the article on reuters.com

Apple is making moves in AI – will we see AI features rolled out for new iPhones?

Apple Inc (AAPL.O) has renewed discussions with OpenAI about using the startup’s generative AI technology to power some new features being introduced in the iPhone later this year, Bloomberg News reported on Friday. The companies have begun discussing terms of a potential agreement and how OpenAI features would be integrated into Apple’s next iPhone operating system, iOS 18, the report said, citing people familiar with the matter.

Reuters

OpenELM: An Efficient Language Model Family

OpenELM uses a layer-wise scaling strategy to efficiently allocate parameters within each layer of the transformer model. See more at machinelearning.apple.com

And more importantly, the Cupertino tech giant released a new language model – OpenELM (Open Efficient Language Models).

The reproducibility and transparency of large language models are crucial for advancing open research, ensuring the trustworthiness of results, and enabling investigations into data and model biases, as well as potential risks. To this end, we release OpenELM, a state-of-the-art open language model. OpenELM uses a layer-wise scaling strategy to efficiently allocate parameters within each layer of the transformer model, leading to enhanced accuracy. For example, with a parameter budget of approximately one billion parameters, OpenELM exhibits a 2.36% improvement in accuracy compared to OLMo while requiring 2 times fewer pre-training tokens.

Apple Machine Learning Research
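To make "layer-wise scaling" a little more concrete, here is a minimal sketch of the general idea: instead of giving every transformer layer the same width, the number of attention heads and the feed-forward size grow from the first layer to the last. The linear interpolation, the function name `layerwise_scaling`, and all the numbers below are illustrative assumptions, not OpenELM's actual configuration.

```python
def layerwise_scaling(num_layers, d_model, head_dim,
                      alpha=(0.5, 1.0), beta=(0.5, 4.0)):
    """Return a (num_heads, ffn_dim) pair for each layer.

    alpha scales the attention width and beta scales the feed-forward
    width; both are interpolated linearly from the first layer to the
    last. All ranges here are hypothetical, chosen for illustration.
    """
    configs = []
    for i in range(num_layers):
        # Position of this layer in [0, 1]; a single-layer model uses 0.
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        a = alpha[0] + (alpha[1] - alpha[0]) * t
        b = beta[0] + (beta[1] - beta[0]) * t
        num_heads = max(1, round(a * d_model / head_dim))
        ffn_dim = round(b * d_model)
        configs.append((num_heads, ffn_dim))
    return configs


if __name__ == "__main__":
    for layer, (heads, ffn) in enumerate(layerwise_scaling(4, 1024, 64)):
        print(f"layer {layer}: heads={heads}, ffn_dim={ffn}")
```

In this toy setup the early layers are narrow and the later ones wide, so the same parameter budget is spread unevenly across depth rather than uniformly.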


Microsoft launches Phi-3, its smallest AI model yet

Microsoft launched the next version of its lightweight AI model Phi-3 Mini. Read the article on theverge.com

Microsoft advances its AI lineup with the first of the three small models the company plans to release.

Phi-3 Mini measures 3.8 billion parameters and is trained on a data set that is smaller relative to large language models like GPT-4. It is now available on Azure, Hugging Face, and Ollama. Microsoft plans to release Phi-3 Small (7B parameters) and Phi-3 Medium (14B parameters). Parameters refer to how many complex instructions a model can understand.

Emilia David

The Verge

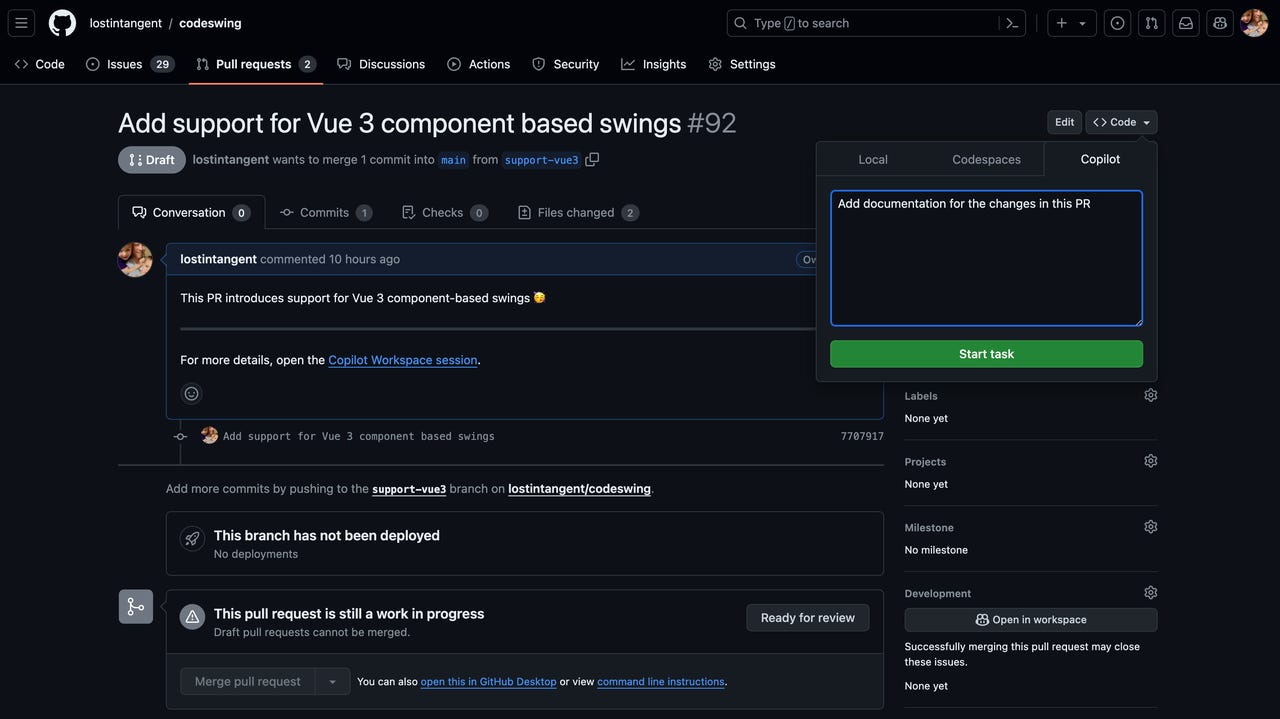

New AI Model Reka Rises to Challenge ChatGPT, Claude and Llama-3

A lesser-known startup has produced a top-performing AI model, Reka Core. Read the article on decrypt.co

Smaller players also want their piece of the AI cake – Reka AI has just released Reka Core, an interesting, reportedly top-performing AI model.

Reka Core is the company’s largest and most capable model to date. And Reka AI—referencing its own tests—says it stands up well against many much larger, well-funded models. In a research paper aggregating the results of several synthetic benchmarks, Reka claims its Core model can compete with AI tools from OpenAI, Anthropic, and Google. One of the key metrics is the MMMU, the Massive Multi-discipline Multimodal Understanding and Reasoning benchmark. It’s a dataset designed to test the capabilities of large language models (LLMs) in multimodal understanding and reasoning at a level comparable to human experts.

Jose Antonio Lanz

decrypt.co

https://llama.meta.com/llama3/

Now available with both 8B and 70B pretrained and instruction-tuned versions to support a wide range of applications. Read the article on llama.meta.com/llama3

Last but not least: a new version of Llama was released by Meta:

Llama 3 models take data and scale to new heights. It’s been trained on our two recently announced custom-built 24K GPU clusters on over 15T token of data – a training dataset 7x larger than that used for Llama 2, including 4x more code. This results in the most capable Llama model yet, which supports a 8K context length that doubles the capacity of Llama 2.

Meta